Stable Diffusion

This implementation is based on the official Stable Diffusion repository, CompVis/stable-diffusion. We have kept the model structure the same so that the open-source weights can be loaded directly. Our implementation does not contain training code.

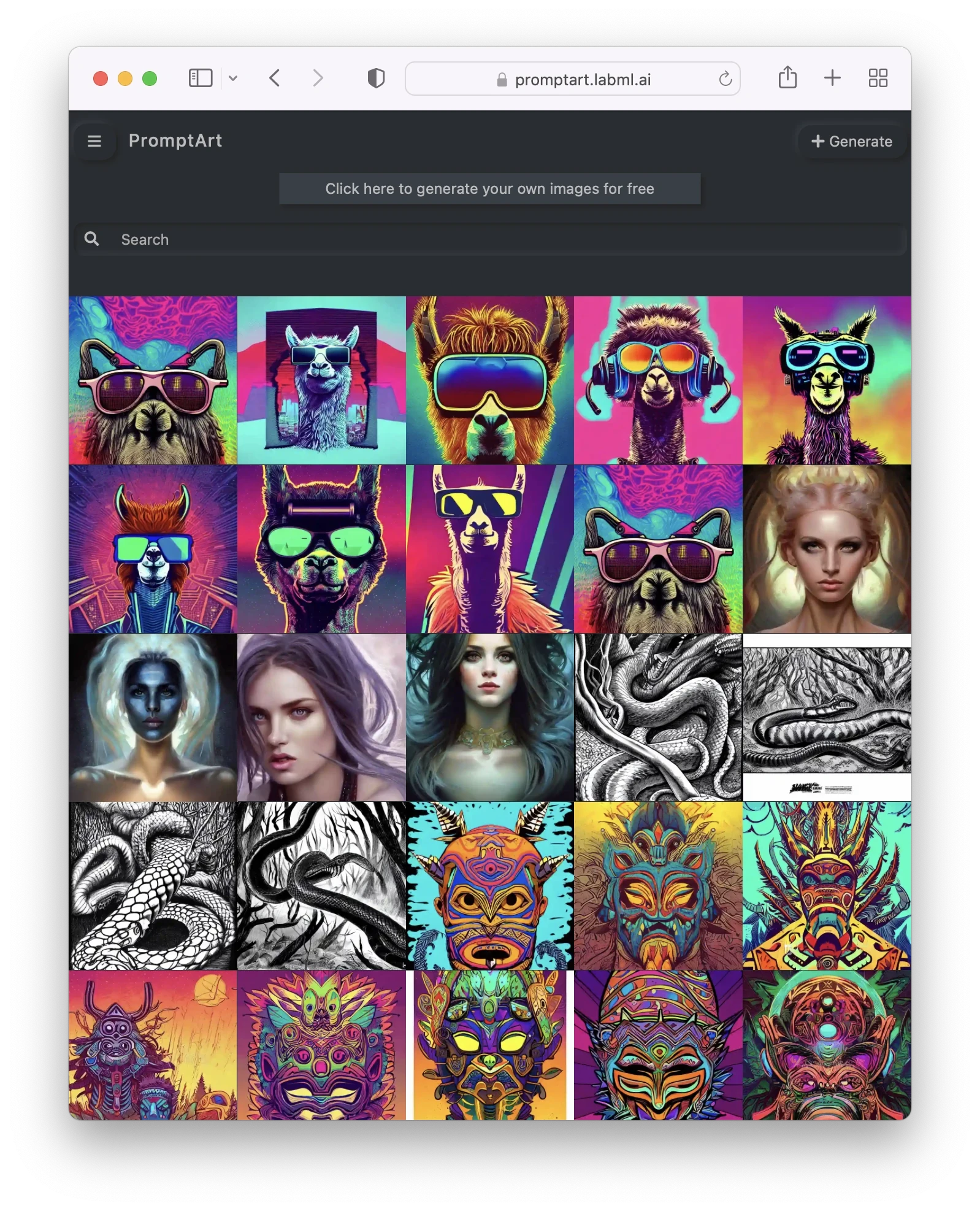

PromptArt

We have deployed a Stable Diffusion-based image generation service at promptart.labml.ai.

Latent Diffusion Model

The core is the Latent Diffusion Model. It consists of:

- AutoEncoder

- U-Net with attention

We have also integrated Flash Attention into the U-Net attention layers as an option, which speeds them up by close to 50% on an RTX A6000 GPU.

The diffusion process is conditioned on CLIP embeddings of the text prompt.
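To illustrate why running diffusion in the autoencoder's latent space is cheaper than running it on pixels, here is a minimal sketch of the shape arithmetic. It assumes the commonly used Stable Diffusion v1 settings (a downsampling factor of 8 and 4 latent channels); the function names are hypothetical and are not part of this repository.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape (channels, height, width) of the latent for an image of the given size.

    The defaults are assumed Stable Diffusion v1 values, not read from this code base.
    """
    if height % downsample or width % downsample:
        raise ValueError("height and width must be multiples of the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(height, width, image_channels=3, downsample=8, latent_channels=4):
    """How many times fewer values the U-Net processes compared to raw pixels."""
    c, h, w = latent_shape(height, width, downsample, latent_channels)
    return (image_channels * height * width) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions, a 512x512 RGB image is represented by a 4x64x64 latent, so each U-Net call sees 48 times fewer values than it would in pixel space.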

Sampling Algorithms

We have implemented the following sampling algorithms:

- Denoising Diffusion Probabilistic Models (DDPM) Sampling

- Denoising Diffusion Implicit Models (DDIM) Sampling
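The difference between the two samplers can be sketched with a single reverse step of each. This is a simplified, pure-Python illustration and not the code in this repository: the function names are hypothetical, a plain linear beta schedule is assumed, the DDPM variance is taken to be beta_t, and `eps` stands in for the noise the U-Net would predict.

```python
import math
import random


def linear_beta_schedule(n_steps, beta_start=1e-4, beta_end=0.02):
    """Assumed linear schedule; the real model may use a different one."""
    step = (beta_end - beta_start) / (n_steps - 1)
    return [beta_start + i * step for i in range(n_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def ddpm_step(x_t, eps, t, betas, alpha_bars, rng):
    """One stochastic DDPM reverse step x_t -> x_{t-1} (variance assumed to be beta_t)."""
    beta_t = betas[t]
    coef = beta_t / math.sqrt(1.0 - alpha_bars[t])
    scale = 1.0 / math.sqrt(1.0 - beta_t)
    sigma = math.sqrt(beta_t) if t > 0 else 0.0  # no noise is added at the final step
    return [scale * (x - coef * e) + sigma * rng.gauss(0.0, 1.0)
            for x, e in zip(x_t, eps)]


def ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM reverse step (eta = 0) between two noise levels."""
    out = []
    for x, e in zip(x_t, eps):
        # Estimate the clean sample, then re-noise it to the previous level.
        x0_pred = (x - math.sqrt(1.0 - alpha_bar_t) * e) / math.sqrt(alpha_bar_t)
        out.append(math.sqrt(alpha_bar_prev) * x0_pred
                   + math.sqrt(1.0 - alpha_bar_prev) * e)
    return out


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
x_t = [0.1, -0.4, 0.7]
eps = [0.3, -0.2, 0.05]  # placeholder for the U-Net's noise prediction
x_prev_ddpm = ddpm_step(x_t, eps, 500, betas, alpha_bars, random.Random(0))
x_prev_ddim = ddim_step(x_t, eps, alpha_bars[500], alpha_bars[480])
```

Because the DDIM step takes two arbitrary noise levels, it can skip over many training steps at once (500 to 480 in the usage above), which is why DDIM sampling typically needs far fewer U-Net evaluations than DDPM sampling.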

Example Scripts

Here are the image generation scripts:

- Generate images from text prompts

- Generate images based on a given image, guided by a prompt

- Modify parts of a given image based on a text prompt
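The image-to-image and in-painting scripts build on the same samplers. A common way to implement them, sketched below under stated assumptions, is to noise the encoded input image up to an intermediate step and denoise from there, and for in-painting to re-impose the noised original outside the edited region after every step. The helper names, the `strength` parameter, and the mask convention (1 keeps the original, 0 lets the model repaint) are illustrative assumptions rather than this repository's API.

```python
import math


def img2img_start_index(strength, n_steps):
    """Number of sampling steps to noise the input by; 0 keeps it, n_steps discards it."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    return int(strength * n_steps)


def add_noise(x0, noise, alpha_bar):
    """Forward diffusion q(x_t | x_0) for a given cumulative alpha."""
    return [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
            for x, n in zip(x0, noise)]


def blend_with_mask(orig_noised, generated, mask):
    """Keep the (noised) original where mask is 1 and the sampler output where it is 0."""
    return [o * m + g * (1.0 - m)
            for o, g, m in zip(orig_noised, generated, mask)]


print(img2img_start_index(0.75, 50))                                # 37
print(blend_with_mask([1.0, 2.0], [10.0, 20.0], [1.0, 0.0]))        # [1.0, 20.0]
```

A higher `strength` starts from a noisier latent, so the result follows the prompt more and the input image less.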

Utilities

util.py defines the utility functions.