用于去噪扩散概率模型 (DDPM) 的 U-Net 模型

这是一个基于 U-Net 的模型,用于预测噪声。

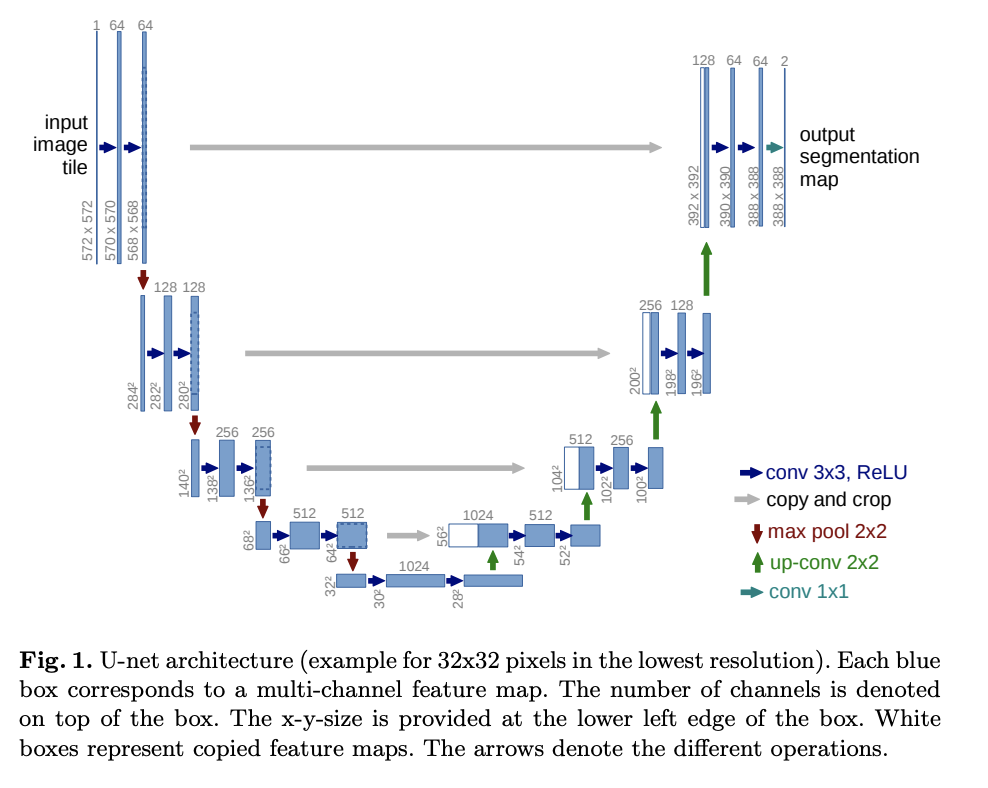

U-Net 是从模型图中的 U 形中获取它的名字。它通过逐步降低(减半)要素图分辨率,然后提高分辨率来处理给定的图像。每种分辨率都有直通连接。

此实现包含对原始 U-Net(残差块、多头注意)的大量修改,还添加了时间步长嵌入。

24import math

25from typing import Optional, Tuple, Union, List

26

27import torch

28from torch import nn

29

30from labml_helpers.module import Module33class Swish(Module):40 def forward(self, x):

41 return x * torch.sigmoid(x)嵌入用于

44class TimeEmbedding(nn.Module):n_channels是嵌入中的维数

49 def __init__(self, n_channels: int):53 super().__init__()

54 self.n_channels = n_channels第一个线性层

56 self.lin1 = nn.Linear(self.n_channels // 4, self.n_channels)激活

58 self.act = Swish()第二个线性层

60 self.lin2 = nn.Linear(self.n_channels, self.n_channels)62 def forward(self, t: torch.Tensor):72 half_dim = self.n_channels // 8

73 emb = math.log(10_000) / (half_dim - 1)

74 emb = torch.exp(torch.arange(half_dim, device=t.device) * -emb)

75 emb = t[:, None] * emb[None, :]

76 emb = torch.cat((emb.sin(), emb.cos()), dim=1)使用 MLP 进行转型

79 emb = self.act(self.lin1(emb))

80 emb = self.lin2(emb)83 return emb86class ResidualBlock(Module):in_channels是输入通道的数量out_channels是输入通道的数量time_channels是时间步 () 嵌入中的通道数n_groups是用于组标准化的组数dropout是辍学率

94 def __init__(self, in_channels: int, out_channels: int, time_channels: int,

95 n_groups: int = 32, dropout: float = 0.1):103 super().__init__()组归一化和第一个卷积层

105 self.norm1 = nn.GroupNorm(n_groups, in_channels)

106 self.act1 = Swish()

107 self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=(3, 3), padding=(1, 1))组归一化和第二个卷积层

110 self.norm2 = nn.GroupNorm(n_groups, out_channels)

111 self.act2 = Swish()

112 self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=(3, 3), padding=(1, 1))如果输入通道的数量不等于输出通道的数量,我们必须投影快捷方式连接

116 if in_channels != out_channels:

117 self.shortcut = nn.Conv2d(in_channels, out_channels, kernel_size=(1, 1))

118 else:

119 self.shortcut = nn.Identity()用于时间嵌入的线性层

122 self.time_emb = nn.Linear(time_channels, out_channels)

123 self.time_act = Swish()

124

125 self.dropout = nn.Dropout(dropout)x有形状[batch_size, in_channels, height, width]t有形状[batch_size, time_channels]

127 def forward(self, x: torch.Tensor, t: torch.Tensor):第一个卷积层

133 h = self.conv1(self.act1(self.norm1(x)))添加时间嵌入

135 h += self.time_emb(self.time_act(t))[:, :, None, None]第二个卷积层

137 h = self.conv2(self.dropout(self.act2(self.norm2(h))))添加快捷方式连接并返回

140 return h + self.shortcut(x)150 def __init__(self, n_channels: int, n_heads: int = 1, d_k: int = None, n_groups: int = 32):157 super().__init__()默认d_k

160 if d_k is None:

161 d_k = n_channels归一化层

163 self.norm = nn.GroupNorm(n_groups, n_channels)查询、键和值的投影

165 self.projection = nn.Linear(n_channels, n_heads * d_k * 3)用于最终变换的线性层

167 self.output = nn.Linear(n_heads * d_k, n_channels)缩放点产品注意力

169 self.scale = d_k ** -0.5171 self.n_heads = n_heads

172 self.d_k = d_kx有形状[batch_size, in_channels, height, width]t有形状[batch_size, time_channels]

174 def forward(self, x: torch.Tensor, t: Optional[torch.Tensor] = None):t

未使用,但它保留在参数中,因为要与注意层函数签名匹配ResidualBlock

。

181 _ = t塑造身材

183 batch_size, n_channels, height, width = x.shape改x

成形状[batch_size, seq, n_channels]

185 x = x.view(batch_size, n_channels, -1).permute(0, 2, 1)获取查询、键和值(串联)并将其调整为[batch_size, seq, n_heads, 3 * d_k]

187 qkv = self.projection(x).view(batch_size, -1, self.n_heads, 3 * self.d_k)拆分查询、键和值。他们每个人都会有形状[batch_size, seq, n_heads, d_k]

189 q, k, v = torch.chunk(qkv, 3, dim=-1)计算缩放的点积

191 attn = torch.einsum('bihd,bjhd->bijh', q, k) * self.scale顺序维度上的 Softmax

193 attn = attn.softmax(dim=2)乘以值

195 res = torch.einsum('bijh,bjhd->bihd', attn, v)重塑为[batch_size, seq, n_heads * d_k]

197 res = res.view(batch_size, -1, self.n_heads * self.d_k)变换为[batch_size, seq, n_channels]

199 res = self.output(res)添加跳过连接

202 res += x改成形状[batch_size, in_channels, height, width]

205 res = res.permute(0, 2, 1).view(batch_size, n_channels, height, width)208 return res211class DownBlock(Module):218 def __init__(self, in_channels: int, out_channels: int, time_channels: int, has_attn: bool):

219 super().__init__()

220 self.res = ResidualBlock(in_channels, out_channels, time_channels)

221 if has_attn:

222 self.attn = AttentionBlock(out_channels)

223 else:

224 self.attn = nn.Identity()226 def forward(self, x: torch.Tensor, t: torch.Tensor):

227 x = self.res(x, t)

228 x = self.attn(x)

229 return x232class UpBlock(Module):239 def __init__(self, in_channels: int, out_channels: int, time_channels: int, has_attn: bool):

240 super().__init__()输入之in_channels + out_channels

所以有,是因为我们将 U-Net 前半部分相同分辨率的输出连接起来

243 self.res = ResidualBlock(in_channels + out_channels, out_channels, time_channels)

244 if has_attn:

245 self.attn = AttentionBlock(out_channels)

246 else:

247 self.attn = nn.Identity()249 def forward(self, x: torch.Tensor, t: torch.Tensor):

250 x = self.res(x, t)

251 x = self.attn(x)

252 return x255class MiddleBlock(Module):263 def __init__(self, n_channels: int, time_channels: int):

264 super().__init__()

265 self.res1 = ResidualBlock(n_channels, n_channels, time_channels)

266 self.attn = AttentionBlock(n_channels)

267 self.res2 = ResidualBlock(n_channels, n_channels, time_channels)269 def forward(self, x: torch.Tensor, t: torch.Tensor):

270 x = self.res1(x, t)

271 x = self.attn(x)

272 x = self.res2(x, t)

273 return x按比例放大要素地图

276class Upsample(nn.Module):281 def __init__(self, n_channels):

282 super().__init__()

283 self.conv = nn.ConvTranspose2d(n_channels, n_channels, (4, 4), (2, 2), (1, 1))285 def forward(self, x: torch.Tensor, t: torch.Tensor):t

未使用,但它保留在参数中,因为要与注意层函数签名匹配ResidualBlock

。

288 _ = t

289 return self.conv(x)按比例缩小要素地图

292class Downsample(nn.Module):297 def __init__(self, n_channels):

298 super().__init__()

299 self.conv = nn.Conv2d(n_channels, n_channels, (3, 3), (2, 2), (1, 1))301 def forward(self, x: torch.Tensor, t: torch.Tensor):t

未使用,但它保留在参数中,因为要与注意层函数签名匹配ResidualBlock

。

304 _ = t

305 return self.conv(x)U-Net

308class UNet(Module):image_channels是图像中的通道数。对于 RGB。n_channels是初始特征图中我们将图像转换为的通道数ch_mults是每种分辨率下的通道编号列表。频道的数量是ch_mults[i] * n_channelsis_attn是一个布尔值列表,用于指示是否在每个分辨率下使用注意力n_blocks是每种分辨UpDownBlocks率的数字

313 def __init__(self, image_channels: int = 3, n_channels: int = 64,

314 ch_mults: Union[Tuple[int, ...], List[int]] = (1, 2, 2, 4),

315 is_attn: Union[Tuple[bool, ...], List[bool]] = (False, False, True, True),

316 n_blocks: int = 2):324 super().__init__()分辨率数量

327 n_resolutions = len(ch_mults)将图像投影到要素地图中

330 self.image_proj = nn.Conv2d(image_channels, n_channels, kernel_size=(3, 3), padding=(1, 1))时间嵌入层。时间嵌入有n_channels * 4

频道

333 self.time_emb = TimeEmbedding(n_channels * 4)U-Net 的前半部分-分辨率降低

336 down = []频道数量

338 out_channels = in_channels = n_channels对于每种分辨率

340 for i in range(n_resolutions):此分辨率下的输出声道数

342 out_channels = in_channels * ch_mults[i]添加n_blocks

344 for _ in range(n_blocks):

345 down.append(DownBlock(in_channels, out_channels, n_channels * 4, is_attn[i]))

346 in_channels = out_channels除最后一个分辨率之外的所有分辨率都向下采样

348 if i < n_resolutions - 1:

349 down.append(Downsample(in_channels))组合这组模块

352 self.down = nn.ModuleList(down)中间方块

355 self.middle = MiddleBlock(out_channels, n_channels * 4, )U-Net 的后半部分-提高分辨率

358 up = []频道数量

360 in_channels = out_channels对于每种分辨率

362 for i in reversed(range(n_resolutions)):n_blocks

以相同的分辨率

364 out_channels = in_channels

365 for _ in range(n_blocks):

366 up.append(UpBlock(in_channels, out_channels, n_channels * 4, is_attn[i]))减少信道数量的最终区块

368 out_channels = in_channels // ch_mults[i]

369 up.append(UpBlock(in_channels, out_channels, n_channels * 4, is_attn[i]))

370 in_channels = out_channels除最后一个以外的所有分辨率向上采样

372 if i > 0:

373 up.append(Upsample(in_channels))组合这组模块

376 self.up = nn.ModuleList(up)最终归一化和卷积层

379 self.norm = nn.GroupNorm(8, n_channels)

380 self.act = Swish()

381 self.final = nn.Conv2d(in_channels, image_channels, kernel_size=(3, 3), padding=(1, 1))x有形状[batch_size, in_channels, height, width]t有形状[batch_size]

383 def forward(self, x: torch.Tensor, t: torch.Tensor):获取时间步长嵌入

390 t = self.time_emb(t)获取图像投影

393 x = self.image_proj(x)h

将以每种分辨率存储输出以进行跳过连接

396 h = [x]U-Net 的上半年

398 for m in self.down:

399 x = m(x, t)

400 h.append(x)中间(底部)

403 x = self.middle(x, t)U-Net 的下半场

406 for m in self.up:

407 if isinstance(m, Upsample):

408 x = m(x, t)

409 else:从 U-Net 的前半部分获取跳过连接并连接

411 s = h.pop()

412 x = torch.cat((x, s), dim=1)414 x = m(x, t)最终归一化和卷积

417 return self.final(self.act(self.norm(x)))